The ChatGPT API is part of OpenAI’s developer platform, providing access to advanced conversational AI models.

It lets applications send message sequences (prompts, instructions, or conversation history) to the model and receive AI-generated responses.

In practical terms, this means any app – from chatbots and customer support tools to content generators and productivity assistants – can incorporate the same powerful language understanding that powers ChatGPT.

At its March 2023 launch, OpenAI confirmed that the ChatGPT API uses the GPT‑3.5 Turbo model (the one behind ChatGPT), so developers get state-of-the-art chat capabilities at scale.

In short, the ChatGPT API brings GPT-3.5 (and higher) chat functionality into your code, enabling human-like dialogues and content creation in your applications.

What Is the ChatGPT API?

The ChatGPT API is essentially the Chat Completion feature of the OpenAI API.

Instead of supplying a single text prompt, you send a sequence of messages (each labeled with a role such as system, user, or assistant) and the API returns a model-generated reply.

This new “chat” interface is more structured and context-aware than traditional text-completion endpoints.

Under the hood, OpenAI formats these conversations into a token stream using its Chat Markup Language (ChatML) format.

The model consumes this structured input and returns a response as if it were participating in a back-and-forth conversation. This design makes it easy to build chatbots or assistants that remember earlier messages and follow instructions.

- Same Model as ChatGPT: The GPT-3.5 Turbo model used in ChatGPT is available via the API. In fact, OpenAI’s blog emphasizes that the model powering ChatGPT and the one exposed via the API are identical.

- Flexible Usage: You can use the ChatGPT API for any NLP task that benefits from conversational context: drafting emails, writing code comments, generating summaries, answering questions, and more. Unlike a single prompt, you can maintain context by including past user and assistant messages.
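Each Chat Completions request is stateless, so "maintaining context" in practice means resending the earlier turns yourself. The minimal sketch below shows one way to keep that message list in Python. The `Conversation` class and `VALID_ROLES` set are hypothetical helpers, not part of any OpenAI SDK, and the assistant reply is a placeholder for what the API would actually return.

```python
from dataclasses import dataclass, field

# Roles used in this article's examples (hypothetical validation set).
VALID_ROLES = {"system", "user", "assistant"}


@dataclass
class Conversation:
    """Keeps the running message list that is resent on every API call."""
    system_prompt: str
    history: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        # Reject typos such as "bot" before they reach the API.
        if role not in VALID_ROLES:
            raise ValueError(f"unknown role: {role!r}")
        self.history.append({"role": role, "content": content})

    def messages(self) -> list:
        # The system message goes first, followed by the turns in order.
        return [{"role": "system", "content": self.system_prompt}] + list(self.history)


chat = Conversation("You are a helpful assistant.")
chat.add("user", "What is Python?")
chat.add("assistant", "Python is a general-purpose programming language.")  # placeholder reply
chat.add("user", "Why is Python popular?")
print(len(chat.messages()))  # 4
```

On each call you would pass `chat.messages()` as the `messages` parameter, then `add()` the assistant reply you get back before the next user turn.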

Why Use the ChatGPT API?

Integrating the ChatGPT API lets developers add a powerful conversational AI without building models from scratch. Some common use cases include:

- Chatbots & Virtual Assistants: Build intelligent chat interfaces for customer support, e-commerce, or personal assistants. The API handles dialogue flow and context, enabling richer interactions than keyword-based bots.

- Content Generation: Generate articles, summaries, social media posts, product descriptions, or marketing copy. The API can take a brief instruction and produce polished, on-brand text.

- Customer Support Bots: Automatically answer FAQs or route customer queries by phrasing them as user messages and letting the model craft helpful responses.

- Productivity Apps: Embed AI helpers in emails, document editors, IDEs, and more. For example, a productivity app could use the API to rephrase or proofread user text, or to schedule tasks via natural language.

- Learning & Tutoring: Create educational tools where the API acts as a tutor or practice partner, answering questions and explaining concepts conversationally.

Because the API provides ready-to-use language understanding, developers can focus on integrating intelligent features rather than managing machine learning models.

OpenAI even reports major platforms like Snapchat and Shopify using the GPT-3.5 API to power chat assistants and shopping recommendations, showing the scalability of this approach.

In summary, the ChatGPT API unlocks GPT-powered features for any software project.

Getting API Access (Sign Up & API Keys)

To start using the ChatGPT (GPT-3.5 Turbo) API, follow these steps:

- Create an OpenAI Account. Go to the OpenAI Platform or ChatGPT site and sign up (or log in). You need an account, which is free to create, to access the API dashboard.

- Verify Your Account. You may need to confirm your email or phone number. This helps OpenAI link usage to your account and billing.

- Set Up Billing. The API uses a pay-as-you-go billing model. In the OpenAI dashboard, navigate to Billing and add a payment method. Without one, your key can only draw on any free trial credits your account has, and requests are rejected once those are used up or expire. (Note: new users have often received free trial credits, but a valid payment method is required for paid usage.)

- Generate an API Key. In the dashboard, go to API Keys (or View API Keys) and click Create new secret key. Give it a name (e.g. "ChatGPT-App") and create it. Copy the key immediately and store it somewhere secure; you won't be able to see it again. Each key is a long string that looks like `sk-…`. Never share this key publicly.

- Optionally, Set Usage Limits. To control costs, you can set hard and soft usage limits under Usage Limits in the dashboard. For example, you can cap your total spend per month. This prevents runaway charges in development or production.

⚠️ Keep Your Key Safe: Your API key is like a password. If someone else gets it, they can use your credits and data.

Use environment variables or a secrets manager to store the key, and never hard-code it or check it into source control. As OpenAI warns, "keep this API key safe at all times. If someone finds your key, they could use it themselves, consuming credits… or even using it as an attack vector". If a key is exposed, you can always delete it in the dashboard and generate a new one.
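Here is a small helper that follows that advice: it reads the key from the environment, fails fast when the key is missing, and only ever exposes a masked version for logging. The function names `load_api_key` and `mask_key` are hypothetical. `OPENAI_API_KEY` is the variable name used throughout this article.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment and fail fast if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export it or load it from a secrets manager")
    return key


def mask_key(key: str, visible: int = 4) -> str:
    """Return a log-safe version of the key that hides all but the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

For example, call `load_api_key()` once at startup and pass the result to your SDK client or HTTP headers. If you need to confirm which key is active, log `mask_key(key)` rather than the key itself.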

Key Endpoints & Documentation

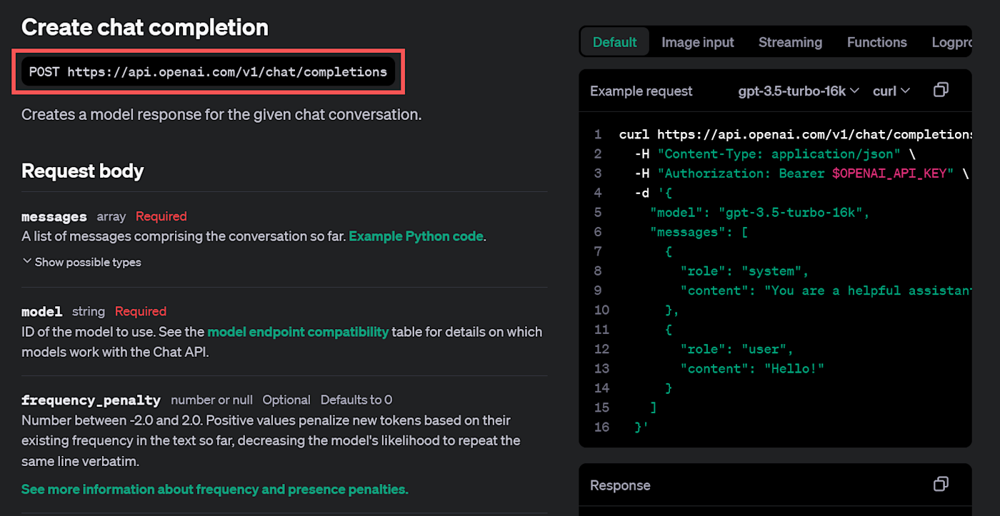

The primary endpoint for conversational AI is the Chat Completion endpoint:

POST /v1/chat/completions: generates a chat-based completion given a sequence of messages.

OpenAI’s documentation provides details for this endpoint. You can find the chat completions API reference on the OpenAI docs (see the “API reference” tab in the dashboard).

For example, a typical API request uses HTTPS POST with a JSON body. Below is a simplified view (from OpenAI’s docs) of calling the chat endpoint with curl:

```bash
curl https://api.openai.com/v1/chat/completions \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {"role": "user", "content": "What is the OpenAI mission?"}
    ]
  }'
```

The screenshot above, from the OpenAI docs, highlights the `/v1/chat/completions` endpoint and an example request. The request body must include:

- `"model"`: the model name (e.g. `"gpt-3.5-turbo"`).
- `"messages"`: an array of message objects, each with a `"role"` (`"system"`, `"user"`, or `"assistant"`) and `"content"`.

For example, setting a system message can guide the assistant’s behavior, like {"role":"system", "content":"You are a helpful assistant."}. A user message contains the actual prompt or question.

The response is a JSON object containing one or more message choices (the AI's replies) and usage information. See OpenAI's API reference for all parameters (`temperature`, `max_tokens`, etc.) and other endpoints.
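To make the response shape concrete, this sketch parses a hand-written sample body with Python's standard `json` module and pulls out the reply text and token usage. The sample values are placeholders rather than real API output, and `extract_reply` is a hypothetical helper. Check the API reference for the full list of response fields.

```python
import json

# Hand-written sample shaped like a Chat Completions response body;
# the values are placeholders, not real API output.
SAMPLE_RESPONSE = """
{
  "id": "chatcmpl-example",
  "object": "chat.completion",
  "model": "gpt-3.5-turbo",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Hello! How can I help?"},
      "finish_reason": "stop"
    }
  ],
  "usage": {"prompt_tokens": 14, "completion_tokens": 30, "total_tokens": 44}
}
"""


def extract_reply(body: dict) -> str:
    """Return the assistant text from the first choice, or fail loudly if there is none."""
    choices = body.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


data = json.loads(SAMPLE_RESPONSE)
print(extract_reply(data))
print(data["usage"]["total_tokens"])
```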

Code Examples (Python & Node.js)

Once you have your key, you can call the API from your code using HTTP or an SDK. Here are simple examples:

Python (using the `openai` library): Install the library (`pip install openai`). The snippet below uses the client interface introduced in version 1.0 of the library. The older `openai.ChatCompletion.create(...)` call seen in many early tutorials was removed in 1.0. Then:

```python
import os

from openai import OpenAI

# The client also reads OPENAI_API_KEY by default; passing it explicitly just makes that visible.
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "How do I integrate the ChatGPT API?"},
    ],
)

answer = response.choices[0].message.content
print("ChatGPT:", answer)
```

This sends two messages (a system instruction and a user question) and prints the assistant's reply. `response.choices[0].message.content` holds the response text.

Node.js (using the `openai` SDK): Install the SDK (`npm install openai`). This example uses the interface of version 4 and later. The `Configuration` and `OpenAIApi` classes from version 3 no longer exist. Save the code as an ES module (for example, a `.mjs` file). Then:

```javascript
import OpenAI from "openai";

// Read the key from the environment instead of hard-coding it.
const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

async function askChatGPT() {
  const res = await openai.chat.completions.create({
    model: "gpt-3.5-turbo",
    messages: [
      { role: "user", content: "Can you give an example of using OpenAI API in JavaScript?" },
    ],
  });
  console.log("Assistant:", res.choices[0].message.content);
}

askChatGPT().catch(console.error);
```

This example prints the assistant's message to the console. Note the similar structure: you supply `model` and a `messages` array, and read `choices` from the returned object.

In both cases, the SDK takes care of setting the right headers (Authorization: Bearer YOUR_KEY and Content-Type: application/json). You could also make raw HTTP requests (e.g. using fetch in JS or requests in Python) by hitting the https://api.openai.com/v1/chat/completions URL as shown above.
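The code below sketches the raw-HTTP route. It uses Python's standard-library `urllib` instead of `requests`, so it needs no extra packages. It only builds the request. Nothing is sent unless you call `send_chat_request`, which makes a real (billable) network call. Both function names are hypothetical. The URL, headers, and JSON body mirror the curl example above.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, messages: list, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Prepare (but do not send) a POST request for the Chat Completions endpoint."""
    payload = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON body (4xx/5xx raise urllib.error.HTTPError)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

To use it, build a request with your key and a `messages` list, pass it to `send_chat_request`, and read `["choices"][0]["message"]["content"]` from the returned dictionary. Error responses such as 429 surface as `urllib.error.HTTPError`, which you can catch and retry.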

Common Use Cases and Examples

Developers use the ChatGPT API in many creative ways. Here are some typical scenarios:

- Multi-turn Conversations: Maintain context by passing all previous messages with each request. For example, first ask "What is Python?" and then follow up with "Why is Python popular?" in the same conversation. Because the earlier exchange is included in the request, the model can answer the follow-up coherently.

- Content Creation: Generate blog outlines, social media captions, or product descriptions. You might send a user prompt like "Write a 3-sentence bio for a software developer." and get a polished result.

- Summarization & Explanation: Provide a long piece of text and ask ChatGPT to summarize or explain it in simpler terms.

- Code Assistance: Ask the API to review code, suggest improvements, or explain programming concepts. (GPT-3.5 and GPT-4 are capable coders when prompted correctly.)

- Customer Support: Integrate with chat widgets or messaging apps. The API can draft responses to user inquiries based on a knowledge base or scripted content.

- Q&A Systems: Build FAQ bots by sending user queries as prompts. The model can answer a wide range of general questions out-of-the-box.

- Language Translation & Localization: It can even translate text or write messages in different styles (formal, casual, etc.) by framing the prompt appropriately.

- Productivity Apps: For example, writing emails: an app could send user bullet points and ask ChatGPT to compose a professional email from them.
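The email scenario in the last bullet mostly comes down to how you frame the request. Below is a minimal sketch of building those messages. The `email_messages` helper and the wording of its system instruction are illustrative assumptions. The resulting list would be passed as the `messages` parameter of a chat completion call.

```python
def email_messages(bullets: list, tone: str = "professional") -> list:
    """Build the messages for a 'turn my notes into an email' request."""
    # Render the user's notes as a bulleted list inside the prompt.
    notes = "\n".join(f"- {b}" for b in bullets)
    return [
        {"role": "system",
         "content": f"You write concise, {tone} emails. Do not invent facts beyond the notes."},
        {"role": "user", "content": f"Write an email based on these notes:\n{notes}"},
    ]


msgs = email_messages(["Project deadline moves to Friday", "Need QA sign-off by Thursday"])
print(msgs[1]["content"])
```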

Many applications will involve combining the ChatGPT API with other systems. For instance, a shopping assistant might use ChatGPT to interpret user queries (“Show me red running shoes under $100”) and then call a product search API.

Or a scheduling bot might use ChatGPT to parse natural language (“Set up a meeting next Tuesday at 3 PM with Alex”) and then interact with a calendar API.

In each case, the ChatGPT API handles the natural language part, while your code handles the business logic. This modular approach is powerful for building intelligent features quickly.
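Here is a minimal sketch of that split for the shopping example. A system prompt asks the model to return JSON filters, your code validates the JSON, and ordinary Python does the search. No API call is made: the `reply` string stands in for what the model might return. `SYSTEM_PROMPT`, `Product`, `parse_filters`, and `search` are all hypothetical names. Models do not always return well-formed JSON, which is why the reply is parsed and checked before use.

```python
import json
from dataclasses import dataclass

# Hypothetical system prompt asking the model to turn a shopping request into JSON filters.
SYSTEM_PROMPT = (
    "Extract search filters from the user's request. Reply with JSON only, "
    'using the keys "color", "category", and "max_price" (null when not mentioned).'
)


@dataclass
class Product:
    name: str
    color: str
    category: str
    price: float


def parse_filters(model_reply: str) -> dict:
    """Validate the model's JSON reply before handing it to business logic."""
    filters = json.loads(model_reply)
    unknown = set(filters) - {"color", "category", "max_price"}
    if unknown:
        raise ValueError(f"unexpected keys from model: {sorted(unknown)}")
    return filters


def search(catalog: list, filters: dict) -> list:
    """Ordinary application code: filter the catalog with the extracted values."""
    results = []
    for p in catalog:
        if filters.get("color") and p.color != filters["color"]:
            continue
        if filters.get("category") and p.category != filters["category"]:
            continue
        if filters.get("max_price") is not None and p.price > filters["max_price"]:
            continue
        results.append(p)
    return results


catalog = [
    Product("Sprint X", "red", "running shoes", 89.0),
    Product("Trail Pro", "red", "running shoes", 129.0),
    Product("Road Lite", "blue", "running shoes", 75.0),
]
# Stand-in for what the model might return for "Show me red running shoes under $100".
reply = '{"color": "red", "category": "running shoes", "max_price": 100}'
print([p.name for p in search(catalog, parse_filters(reply))])  # ['Sprint X']
```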

Pricing, Tokens, and Usage Limits

Using the ChatGPT API incurs costs based on token usage. OpenAI charges per 1,000 tokens, where a token is a chunk of text averaging roughly 4 characters of English.

For ChatGPT (GPT-3.5 Turbo), the launch price was quite low: $0.002 per 1K tokens, with prompt and completion tokens billed at the same rate. For example, a 500-token prompt that yields a 500-token response uses 1,000 tokens and would cost about $0.002. Prices have changed since launch, so check OpenAI's pricing page for current rates.
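The same arithmetic can be done before a request is sent, as a rough budget check. This sketch uses the ~4 characters per token rule of thumb from the previous paragraph, so it is only an estimate. An exact count needs a real tokenizer (OpenAI publishes one as the `tiktoken` library). `rough_token_count` and `estimate_cost` are hypothetical names, and the rate is the $0.002 figure quoted above.

```python
import math

GPT35_PRICE_PER_1K = 0.002  # launch price quoted above; rates change, so check OpenAI's pricing page


def rough_token_count(text: str) -> int:
    """Rough estimate from the ~4 characters per token rule of thumb (not an exact tokenizer)."""
    return math.ceil(len(text) / 4)


def estimate_cost(prompt: str, expected_reply_tokens: int) -> float:
    """Pre-flight estimate: prompt and completion tokens share one rate for this model."""
    total = rough_token_count(prompt) + expected_reply_tokens
    return total / 1000 * GPT35_PRICE_PER_1K


# The worked example from the text: ~500 prompt tokens (about 2,000 characters)
# plus a 500-token reply is 1,000 tokens, i.e. 1 x $0.002.
print(estimate_cost("x" * 2000, 500))  # 0.002
```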

For reference, the more advanced GPT-4 model costs more. The standard GPT-4 (8K context) is $0.03 per 1K prompt tokens and $0.06 per 1K completion tokens.

OpenAI even offers a GPT-4 Turbo version with larger context (128K) at $0.01/$0.03 per 1K. Consider this when choosing models: GPT-4 can handle harder tasks and longer context, but at higher cost. Below is a quick comparison:

| Model | Context Length | Use Case Examples | Price (Prompt) | Price (Completion) |
|---|---|---|---|---|
| GPT-3.5 Turbo | ~4K tokens | General chat, content | $0.002 / 1K tokens | $0.002 / 1K tokens |
| GPT-4 (8K) | ~8K tokens | Complex reasoning, nuanced text | $0.03 / 1K tokens | $0.06 / 1K tokens |
| GPT-4 Turbo (128K) | 128K tokens | Very long docs, scaling | $0.01 / 1K tokens | $0.03 / 1K tokens |

Because pricing is pay-as-you-go, it’s important to monitor your token usage. The API response includes a usage object showing how many tokens were used for the prompt and completion. For example, a response might include:

```json
"usage": {
  "prompt_tokens": 14,
  "completion_tokens": 30,
  "total_tokens": 44
}
```

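This lets you calculate cost: multiply `prompt_tokens` and `completion_tokens` by the model's prompt and completion rates and add the results. (For GPT-3.5 Turbo, where both rates are equal, this is simply total tokens × rate.) Always track this to manage your budget.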
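Because GPT-4 models bill prompt and completion tokens at different rates, it is safer to price the two counts separately. The sketch below does that for the usage object shown above, using the per-1K prices from the comparison table. The dictionary keys are illustrative labels rather than exact model IDs, and the prices are snapshots that may be out of date.

```python
# Per-1K-token prices (USD) copied from the comparison table above:
# (prompt rate, completion rate). Snapshots only; check current pricing.
PRICES_PER_1K = {
    "gpt-3.5-turbo": (0.002, 0.002),
    "gpt-4": (0.03, 0.06),
    "gpt-4-turbo": (0.01, 0.03),
}


def cost_from_usage(model: str, usage: dict) -> float:
    """Bill prompt and completion tokens at their own rates, then add them."""
    prompt_rate, completion_rate = PRICES_PER_1K[model]
    return (usage["prompt_tokens"] * prompt_rate
            + usage["completion_tokens"] * completion_rate) / 1000


usage = {"prompt_tokens": 14, "completion_tokens": 30, "total_tokens": 44}
for model in PRICES_PER_1K:
    print(f"{model}: ${cost_from_usage(model, usage):.6f}")
```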

Free Trial & Quotas: Historically, new OpenAI accounts received some free credits (e.g. $18) to experiment. Check the OpenAI billing page for current promotions.

Note that once free credits are used, you must have billing enabled to continue calls.

Rate Limits: OpenAI also enforces rate limits on how many requests and tokens you can send per minute (requests per minute, RPM, and tokens per minute, TPM).

Exact limits depend on the model and your account tier. For example, GPT-4 models generally have lower rate limits than GPT-3.5 Turbo because they require more compute.

If you exceed the limit, you’ll get an HTTP 429 error (“Rate limit reached”). To handle this, implement exponential backoff retries and respect the x-ratelimit headers in the response.

You can also request higher rate limits from OpenAI if needed.
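Here is a minimal sketch of exponential backoff with jitter for 429 responses. `RateLimitError` is a hypothetical placeholder for whatever your HTTP client or SDK raises on a 429. With `urllib`, for example, you would catch `urllib.error.HTTPError` and check its `code`. Note that the official `openai` v1 Python library raises `openai.RateLimitError` and already retries some errors on its own. The retry count and delays are assumptions to tune for your own limits. This version does not read the `x-ratelimit` headers. The sleep function is injectable so the logic can be tested without actually waiting.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(); on RateLimitError wait base_delay * 2**attempt (plus jitter) and retry."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay / 2))


# Demo: a fake call that is rate-limited twice, then succeeds.
attempts = {"n": 0}


def flaky():
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimitError("429 Rate limit reached")
    return "ok"


waits = []
print(call_with_backoff(flaky, sleep=waits.append))  # ok
```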

Best Practices for Integration

To make the most of the ChatGPT API and build reliable apps, consider the following best practices:

- Prompt Engineering: Craft your prompts carefully. Use the `system` role to set context or instructions (e.g., "You are a helpful assistant specialized in XYZ"). Provide clear, concise user messages. For multi-turn conversations, send all relevant history in the `messages` array to maintain context.

- Token Management: Be mindful of token budgets. Shorten unnecessary prompt text, and use parameters like `max_tokens` to cap response length. Monitor the `usage` object to see how many tokens are consumed. This helps predict costs and avoid unexpectedly long answers.

- Temperature and Parameters: Control randomness with parameters. A low `temperature` (e.g. 0–0.5) gives more deterministic, focused replies, which is good for factual answers. A higher temperature (e.g. 0.7+) yields more creative output, useful for brainstorming or creative writing. Experiment with `max_tokens`, `temperature`, and `top_p` to fine-tune results for your use case.

- Error Handling: Always check for API errors. Common errors include rate limiting (429), invalid requests (400), or authentication issues (401). Implement retry logic for transient errors. For example, on a 429, wait (backoff) and retry. Log errors for debugging. Refer back to the API error messages and docs to correct issues.

- API Key Security: Store your API key securely. Use environment variables or a secrets manager, not plain text. Regularly rotate keys and delete any that may have been exposed. Never expose keys in client-side code or public repos.

- Data Privacy: By default, data sent through the API is not used to train OpenAI's models (per OpenAI's policy since March 2023), but double-check the latest data usage policies. Do not send sensitive personal data unless doing so complies with your privacy requirements.

- Testing and Logging: Thoroughly test the conversation logic. Log both inputs and outputs (sanitized if needed) so you can review how the model is behaving. This is crucial for identifying prompt issues or bias.

- Cost Control: Use the lowest capable model for a task. For routine queries, GPT-3.5 Turbo is usually sufficient and much cheaper than GPT-4. Monitor your spending and set hard usage limits in the OpenAI dashboard to avoid surprises.

- Asynchronous Calls: If your application can handle async flows, consider making API calls asynchronously (especially in web apps) since responses take time. Provide user feedback (e.g., a typing indicator) while waiting for the AI’s reply.
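For the token-management point above, one common approach is to keep the system message and drop the oldest turns once the conversation outgrows a budget. The sketch below assumes the rough ~4 characters per token estimate and ignores the small per-message formatting overhead, so leave headroom below the model's real context limit. `rough_tokens` and `trim_history` are hypothetical helpers.

```python
import math


def rough_tokens(message: dict) -> int:
    """~4 characters per token heuristic; a real tokenizer (such as tiktoken) is more accurate."""
    return math.ceil(len(message["content"]) / 4)


def trim_history(messages: list, budget: int) -> list:
    """Keep the system message(s) plus as many of the newest turns as fit in the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(rough_tokens(m) for m in system)
    kept = []
    for m in reversed(rest):  # walk backwards from the newest turn
        cost = rough_tokens(m)
        if used + cost > budget:
            break  # stop at the first turn that no longer fits, so no gaps appear
        kept.append(m)
        used += cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are terse."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]
print([m["role"] for m in trim_history(history, budget=25)])  # ['system', 'assistant', 'user']
```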

Applying these practices will help ensure your ChatGPT integration is secure, cost-effective, and reliable. In short: keep keys out of code, experiment with parameters, and always plan for costs and errors.

Next Steps & Resources

You’re now ready to start building with the ChatGPT API. To recap, you need to:

- Sign up and get your OpenAI API key.

- Study the official Chat Completions docs and experiment in the OpenAI Playground if you like (try various prompts and settings).

- Write code (in Python, Node.js, etc.) that calls the Chat Completions endpoint, either through an SDK or with an HTTP POST to `/v1/chat/completions`. Use your API key and handle the JSON response.

OpenAI’s official API reference has full details on all endpoints, models, and parameters.

For example, the chat completions reference (accessible via the “API Reference” link in your dashboard) shows how to structure your requests and interpret responses.

If you want more examples and guides on ChatGPT API integration, explore GPT-Gate.Chat. Our developer guides cover advanced topics like building multi-turn bots, function calling, and more.

Ready to build? Check out GPT-Gate.Chat’s ChatGPT API guides for tutorials, code samples, and tips to supercharge your development. Happy coding, and welcome to the world of AI-powered apps!