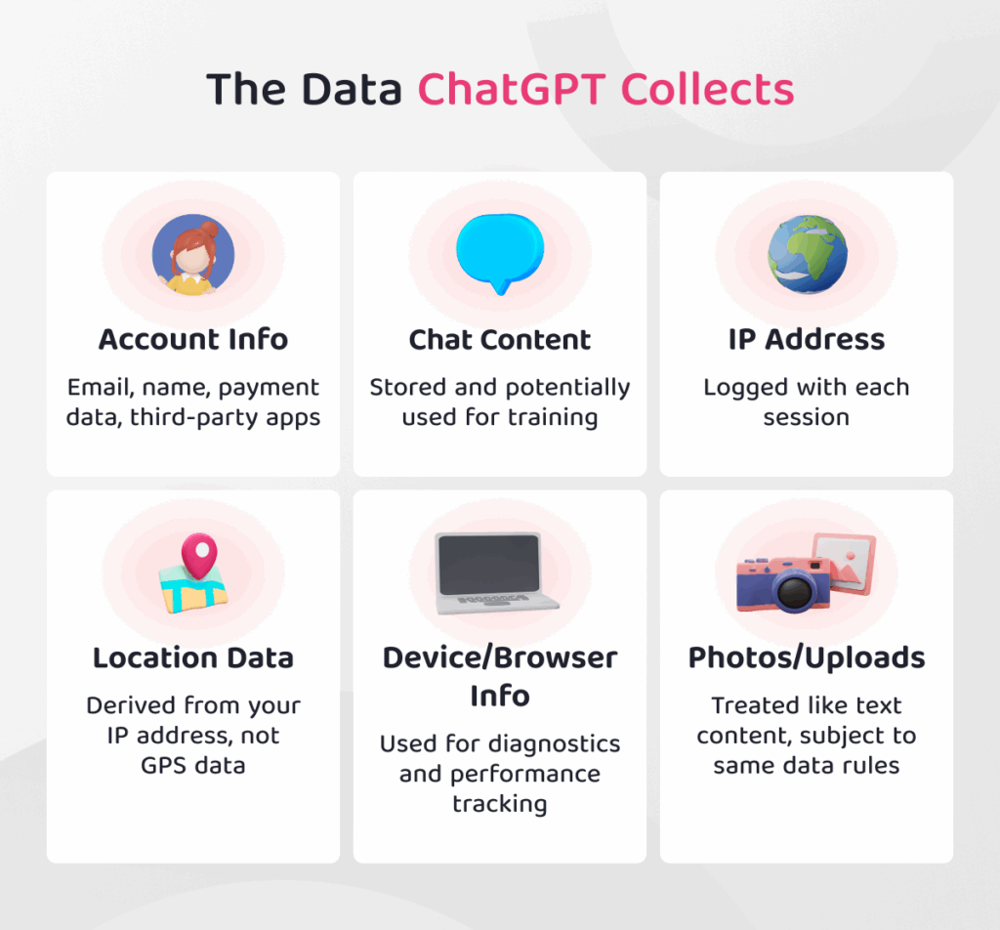

AI chatbots like ChatGPT can be incredibly helpful – but they raise important data privacy questions. ChatGPT collects and processes the information you share (prompts, uploads, etc.) to provide answers, improve its models, and run its service.

OpenAI’s official policies confirm that ChatGPT logs user content (prompts, responses, account details, and technical data) and may use it to improve future models.

For example, if you’re logged in, your name, email, IP address, and any files you upload are tied to your account and stored on OpenAI’s systems (and with trusted service providers).

By default, conversation data from free ChatGPT accounts can be used for model training, while customer content from paid business accounts (ChatGPT Team/Enterprise) is not used for training unless the organization explicitly opts in.

ChatGPT collects data like your account info, chat content, IP address, device/browser details, and any uploaded images.

OpenAI encrypts data in transit and at rest, and shares only minimal content with third-party service providers under strict confidentiality.

The official Privacy Policy explains that all user “content” (your prompts, files, images, etc.) and technical logs are stored in OpenAI’s systems and may be used for service improvement.

Crucially, OpenAI’s policies state it does not sell or share your data for advertising purposes.

They also let you delete your account or clear chat history: cleared chats are deleted from OpenAI’s servers within 30 days, and you can submit a data deletion request (which takes up to 30 days).

How ChatGPT Uses Your Data and Privacy Protections

Model training and improvements. Under ChatGPT’s default settings, OpenAI may use the prompts and responses from non-enterprise users to train and improve its AI models.

(Enterprise and Team accounts’ data are not used for training unless the organization opts in.) Importantly, OpenAI emphasizes that its AI does not “copy and paste” personal data.

Its policies say models learn patterns rather than storing exact personal details. OpenAI also offers a setting: free/Plus users can turn off data sharing in their privacy settings, and “temporary chats” are not used for training.

If you disable history or use a Temporary Chat, your conversation won’t be used to improve the model (though OpenAI may still keep it briefly for review or compliance).

Security and human review. OpenAI employs technical and organizational safeguards: data is encrypted in transit and at rest, and only a limited number of authorized staff and contractors can access content for specific purposes (moderation, support, legal compliance, or model improvement).

All access is logged, and reviewers are trained to protect privacy. OpenAI explicitly advises users: “Please don’t enter sensitive information that you wouldn’t want reviewed or used.”

This means avoiding real passwords, social security numbers, or proprietary secrets, since employees might need to view content to investigate issues or train the model.

OpenAI also prohibits using ChatGPT to collect or infer sensitive personal attributes about others (and forbids users from prompting it to reveal someone’s private info).

Privacy policy summary. OpenAI’s Privacy Policy (updated Jan 2024) clarifies that it collects your account details (name, contact, payment info), user content (prompts, uploads), and technical/log data (IP address, device/browser info).

It shares data only with trusted subprocessors (for hosting, safety, etc.), never for marketing, and does not sell your personal data.

You have control: you can delete your account, opt out of data use, turn off chat history, or clear conversations. Deleted content is removed within 30 days. In summary, ChatGPT is built with strong security (encryption, access logs) and privacy options, but it’s ultimately a cloud service: you should assume anything you share is stored and possibly seen by OpenAI.

What Not to Share with ChatGPT

Even with protections, you must guard highly sensitive information. Never share personally identifiable info, financial details, passwords, or any confidential secrets in a chat. Security experts warn that ChatGPT is not a secure vault for sensitive data. In practice, you should avoid posting:

- PII (Personal Data): Full names, exact addresses, phone numbers, social security or ID numbers, birthdates, etc. For example, don’t type “My SSN is 123-45-6789” or “Here is my medical record.”

- Financial/Bank Info: Credit card numbers, bank account details, or exact salary figures tied to you or others.

- Passwords and Login Credentials: Never reveal your passwords or authentication tokens. Anything you type is stored on OpenAI’s servers and may be reviewed, so treat any credential that ends up in a chat as compromised.

- Proprietary and Confidential Business Info: Company trade secrets, unpublished code, legal documents, patent ideas, or client lists should not be shared. These are typically protected by NDA and could harm your business if exposed.

- Private Personal Secrets: Personal diary entries, health issues, or intimate details about yourself or others. ChatGPT has no social privacy norms and might inadvertently expose or misuse such info.

Key takeaway: Treat ChatGPT like a public forum or insecure email – don’t type anything you wouldn’t say publicly. The model’s privacy guardrails aren’t foolproof.

For example, security blogs note that users have accidentally posted sensitive prompts or company data to ChatGPT, creating leak risks. OpenAI itself cautions against sharing personal data that you wouldn’t want reviewed.

If you need ChatGPT to process sensitive info (say, code with credentials), strip or anonymize it first: use placeholders (e.g. “<username>”) or generalize details.
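
The placeholder approach above can be sketched in a few lines of Python. This is a minimal illustration, not a complete PII detector: the `redact` function, the placeholder names, and the three regular expressions are assumptions made for this example, and real data will contain formats they miss.

```python
import re

# Illustrative patterns only (assumed for this sketch); real PII detection
# needs far broader coverage than three regular expressions.
PATTERNS = {
    "<ssn>": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "<email>": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "<card>": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace every match of a known pattern with its placeholder."""
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    return text


print(redact("My SSN is 123-45-6789, email jane@example.com."))
# prints: My SSN is <ssn>, email <email>.
```

Running the redaction locally, before anything is pasted into a chat, means the original values never leave your machine. Always reread the result, since pattern matching can miss unusual formats.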

Comparing AI Tools: ChatGPT, Google Bard (Gemini), and Claude

Different AI platforms have different privacy models. Here’s how ChatGPT’s approach stacks up against Google’s Bard/Gemini and Anthropic’s Claude:

- ChatGPT (OpenAI): As described, ChatGPT (free/Plus) may use your prompts and responses for training unless you opt out. It logs chats indefinitely by default (unless history is turned off) and stores them for service and moderation. Enterprise plans do not use data for training and offer admin-controlled retention. OpenAI encrypts data and limits access to authorized personnel.

- Google Bard/Gemini: Google’s AI assistant collects and uses data as part of the user’s Google Account. According to Google’s Gemini Apps Privacy Notice, all your chats, uploads, feedback, location, and connected-app info are collected, and used to improve Google products. By default, Google saves your Bard/Gemini conversations (if you have “Web & App Activity” on) for up to 18 months (you can change this setting to 3 or 36 months). Human reviewers also read Gemini chats (with your ID detached) to improve the model. Even if you turn off Bard/Gemini Activity, Google holds your last conversation for 72 hours to operate the service. In short, Google’s model is to use your data broadly to enhance AI features; like OpenAI, they do not use it to target ads, but it is part of your Google Account data subject to Google’s policies. Users must rely on Google’s settings and activity controls to manage their data.

- Anthropic Claude: By default, Claude’s free/pro versions are privacy-preserving: they do not use your inputs or outputs to train their public models. Claude only “ingests” data if you explicitly opt in (e.g. by submitting feedback) or report a bug. If a prompt violates Claude’s policy (e.g. disallowed content), that prompt may be used internally to improve safety systems, but ordinary chats are not. Users can delete conversations at any time, and Claude clears deleted chats from its servers within about 30 days. Likewise, Claude’s enterprise/API customers default to no-training usage. Notably, Anthropic embeds “privacy by design” into Claude: its AI is trained (via “Constitutional AI”) to avoid repeating personal data and to protect privacy.

Overall, Claude offers stronger default privacy (no training on your data) than ChatGPT and Bard. ChatGPT Enterprise/Team and Claude Enterprise do not share data for model training; free ChatGPT and Bard/Gemini do share for improvement.

In all cases, avoid sensitive inputs. All platforms encourage users not to input highly confidential info (and provide ways to delete history).

Addressing Common Concerns

- Can ChatGPT leak my private data? ChatGPT itself doesn’t “announce” your personal data to others – it doesn’t have a memory of individual users that it can query. However, if you share something sensitive, OpenAI records it and it could inadvertently influence future outputs. For example, security analysts have shown that training data (often scraped from the web) can cause models to repeat personal data or proprietary code under some circumstances. OpenAI’s safeguards (filtering, privacy policies, and not training on new data if you opt out) reduce this risk but do not eliminate it. More practically, the bigger leak risk is human: OpenAI moderators or engineers could read flagged or opted-in content (though they’re trained to protect privacy). The bottom line: Don’t feed it secrets.

- Is ChatGPT safe for personal or business use? It’s safe for general-purpose queries: language help, education, coding without sensitive details. OpenAI has designed ChatGPT with strong security and transparency, and it does not maliciously exploit your data. But it’s not end-to-end encrypted or isolated per session: anyone with admin privileges at OpenAI (or an attacker in a security breach) could access prompts. Businesses worried about confidentiality often restrict ChatGPT use to approved data, and some use enterprise versions, which keep data out of model training and offer admin-controlled retention. Many companies have even banned ChatGPT for proprietary work, or require secrets to be redacted first. For personal use, treat ChatGPT like a web search: it’s fine to ask about general topics, but don’t paste your journal, tax returns, or private messages into the prompt.

- Will ChatGPT remember my info across sessions? ChatGPT doesn’t recall a past conversation once it is closed, so it won’t spontaneously bring up something you told it weeks ago. The chats are not ephemeral, though: OpenAI stores your chat logs on its servers, and if history is enabled they stay tied to your account and visible in your history tab whenever you sign in.

- What about real examples of data exposure? Aside from speculation, there have been isolated incidents. For instance, in early 2023, some ChatGPT users reported occasionally seeing other users’ prompts in their interface due to a bug. OpenAI fixed that promptly. More broadly, reports have surfaced of ChatGPT answering questions with detailed company-specific info (like exact salaries or patent details) that likely came from its pre-training data. This shows AI can regurgitate proprietary or personal data if it’s already out in the world. Thus, even without new leaks, it’s safest to assume any secret you input could later influence someone else’s response (albeit unpredictably).

Safe vs. Unsafe Usage Examples

- Safe Use (Public or Non-sensitive): Asking for general advice or information. For example: “What are some healthy dinner ideas?”, “How do I improve my resume?”, or “Explain the concept of photosynthesis.” These queries contain no personal identifiers or confidential data. ChatGPT handles them without privacy concern.

- Unsafe Use (Sensitive Data): Providing personal data or private content. Example: “Here is my private address and phone number – please format them in a letter”, or “Our company’s confidential client list is: [names]. Give me suggestions based on it.” These would be unsafe because you’re feeding PII or corporate secrets into ChatGPT, and that data gets stored on OpenAI’s servers.

- Work Scenario – Good vs. Bad: Good: You tell ChatGPT, “I work at a bank and need a template for an email about account security.” (This is general, no specifics.) Bad: You copy-paste a real customer’s personal data and ask ChatGPT to analyze it or draft an email specific to that person. The latter shares confidential financial info and violates privacy.

- Health/Legal Advice – Not Safe: Even though ChatGPT can discuss medical or legal topics, you should never upload your own medical records or legal documents. Instead, keep questions abstract (“What are common treatments for X?”) rather than specific to your personal file.

- Coding Example: Safe: “How do I solve this generic sorting problem in Python?” Unsafe: Pasting proprietary source code that contains secret API keys or internal logic. The first teaches you a concept, the second risks leaking your intellectual property.
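
For the coding scenario, a quick local check can flag lines that look like hardcoded credentials before any source code is pasted into a chat. The sketch below is a rough heuristic under stated assumptions: the function name `find_secret_lines` and the list of secret-sounding variable names are hypothetical choices for this example, and a clean result does not prove the code is safe to share.

```python
import re

# Hypothetical heuristic: a secret-sounding name assigned a quoted literal.
SECRET_ASSIGNMENT = re.compile(
    r"\b\w*(?:api_?key|secret|token|password)\w*\s*[:=]\s*['\"][^'\"]+['\"]",
    re.IGNORECASE,
)


def find_secret_lines(source: str) -> list[int]:
    """Return 1-based line numbers that look like hardcoded credentials."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if SECRET_ASSIGNMENT.search(line)
    ]


snippet = 'import os\nAPI_KEY = "sk-not-a-real-key"\nkey = os.environ["API_KEY"]\n'
print(find_secret_lines(snippet))  # prints: [2]
```

Only the literal assignment on line 2 is flagged; reading the key from an environment variable on line 3 is not, because no secret value appears in the source.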

Use common sense: if the prompt includes data you wouldn’t share on social media or in an email without encryption, don’t put it in ChatGPT.

Think of ChatGPT as a semi-public space: the intended audience is you and the AI, but OpenAI reviewers may also see the data, so handle it accordingly.

Practical Privacy Tips for ChatGPT

- Enable Safe Settings: In ChatGPT’s settings, turn off “Share data to improve ChatGPT” if you see that option. Use Temporary Chat mode for sensitive topics; those chats won’t be used for model training.

- Limit Personal Identifiers: Refer to yourself obliquely. Instead of “I, John Smith, live at 123 Main St,” say “I live on a main street.” Use placeholders like <name> or pseudonyms. This way even if the text is logged, it isn’t tied to your identity.

- Delete Histories: Regularly clear your chat history. In ChatGPT, you can delete individual conversations or “Clear All” to remove old prompts from your account. (They’ll be purged from OpenAI’s active systems within ~30 days.)

- Review and Export Data: You have the right to submit a data privacy request via OpenAI’s portal (privacy.) and see what data of yours they have. You can also export your chat logs for offline record-keeping and then delete them from the platform if desired.

- Use Trusted Devices and Connections: Always log in via secure, private networks. Avoid using public computers or Wi-Fi for ChatGPT if you care about privacy. Consider using a VPN to mask your IP address, which adds an extra layer between your identity and ChatGPT.

- Validate Content Carefully: Any answer involving facts or advice (especially medical, legal, or financial) should be double-checked with reliable sources. Don’t send truly confidential data expecting a secure solution. Instead, abstract out the problem or discuss hypotheticals.

- Prefer Enterprise/Private Options for Work: If you’re a business user, use ChatGPT Enterprise or a specialized AI tool designed for data privacy. These versions keep corporate data out of model training by default and let admins manage retention.

- Educate Yourself and Teams: Familiarize yourself and colleagues with AI privacy policies. Share guidance like “5 things not to share with ChatGPT” to avoid accidental leaks. For example, AgileBlue’s list of banned content for ChatGPT includes exactly the sensitive categories we discussed.
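
The “Limit Personal Identifiers” tip can be made reversible: swap real names for numbered placeholders before sending a prompt, then restore them locally in the response. The sketch below assumes you already know which names appear in the text; the function names and the `<name_N>` placeholder format are illustrative choices, not part of any ChatGPT feature.

```python
def pseudonymize(text: str, names: list[str]) -> tuple[str, dict[str, str]]:
    """Swap each known name for a numbered placeholder; return text and mapping."""
    mapping: dict[str, str] = {}
    for index, name in enumerate(names, start=1):
        placeholder = f"<name_{index}>"
        if name in text:
            text = text.replace(name, placeholder)
            mapping[placeholder] = name
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original names back into a response, on your own machine."""
    for placeholder, name in mapping.items():
        text = text.replace(placeholder, name)
    return text


safe_prompt, mapping = pseudonymize(
    "John Smith emailed Ana Lopez.", ["John Smith", "Ana Lopez"]
)
print(safe_prompt)  # prints: <name_1> emailed <name_2>.
print(restore("Dear <name_2>, <name_1> says hi.", mapping))
# prints: Dear Ana Lopez, John Smith says hi.
```

The mapping never leaves your device, so the logged conversation contains only placeholders. Plain string replacement is a simplification: if one name is a substring of another, list the longer name first.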

By following these tips, you can enjoy ChatGPT’s benefits while minimizing privacy risks. Always assume that anything you type may persist in some form, and act accordingly.

Key Takeaways

- Data Collected: ChatGPT logs prompts, account info, IP/device data, and file uploads. (See embedded infographic.)

- Usage: Free ChatGPT may use your inputs to train models; enterprise/business accounts do not by default.

- Human Review: Authorized staff can see content for abuse review or improvement – so avoid sensitive data.

- Don’t Share: Never give ChatGPT your SSN, passwords, private keys, personal health records, or confidential business plans.

- Other AI Tools: Google’s Bard (Gemini) also collects your chats (to improve services) and retains them up to 18 months by default. Anthropic’s Claude is more privacy-focused: it does not train on user chats unless you opt in.

- Safety: ChatGPT is safe for general queries, but it’s not an isolated vault, so use it with caution even though the service itself is secured. Use built-in privacy features, delete logs regularly, and never rely on it for secret or critical information handling.

- Protect Yourself: Use pseudonyms, a VPN, and clear your history. For sensitive tasks, use specialized enterprise AI services or on-premises models.

ChatGPT and similar AI tools are evolving rapidly. By staying informed of the latest privacy policies and following best practices, you can leverage these technologies without compromising your personal or business data.

Ready to learn more about using AI safely? Explore GPT-Gate.Chat for tips, guides, and tools on privacy-conscious AI.

Our resources will help you harness ChatGPT’s power while keeping your sensitive information secure. Embrace AI responsibly – keep your data private and your mind at ease!